How Streamlining AI with Containers and Kubernetes Revolutionizes Deployment

Artificial intelligence is propelling IT resource consumption to staggering new heights. As data center vacancies reach record lows, AI-driven demand for computing power is doubling every 100 days. By 2025, researchers predict 40% of professional services spend will involve generative AI.

Businesses are rapidly pushing toward workflow efficiency, but without resource efficiency, they could face limitations on their path to sustainable growth. Achieving market leadership will require proactive measures: organizations must prioritize the optimization of AI and machine learning workloads, particularly at the deployment stage.

Learn why IT industry leaders, including OpenAI, are turning to containers and Kubernetes to streamline artificial intelligence and accelerate application modernization.

How Containers Support AI Deployment

AI deployment commonly involves complex dependencies, including specific versions of frameworks and libraries such as TensorFlow and PyTorch. As a result, the transition from development to production can be technically challenging, with even small version mismatches causing anything from minor slowdowns to significant integration delays.

Containerization addresses this challenge by encapsulating the application, along with all its dependencies, in a single, isolated package. This highly portable “container” includes everything needed to run the AI tool, from the language runtime to system libraries, so it behaves the same on any host with a compatible container runtime and kernel. IT teams can move applications between environments, such as testing and production, with far fewer compatibility issues or unexpected bugs.
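To make the dependency problem concrete, here is a minimal Python sketch of a check a team might run when a container starts, confirming that the installed packages match the versions pinned for the image. The function name `find_mismatches` and the pinned versions in the usage line are hypothetical, chosen only for illustration.

```python
from importlib import metadata
from typing import Dict, Optional, Tuple


def find_mismatches(pinned: Dict[str, str]) -> Dict[str, Tuple[str, Optional[str]]]:
    """Return {package: (pinned_version, installed_version)} for each mismatch.

    installed_version is None when the package is not installed at all.
    """
    mismatches = {}
    for name, wanted in pinned.items():
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            installed = None
        if installed != wanted:
            mismatches[name] = (wanted, installed)
    return mismatches


if __name__ == "__main__":
    # Hypothetical pins; a real project would read these from its lock file.
    print(find_mismatches({"torch": "2.3.1", "numpy": "1.26.4"}))
```

An empty result means the environment matches the pins. Inside a container built from a fixed image, that should hold on every host, which is the point of packaging dependencies with the application.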

Containers can also reduce the overhead of AI workloads. Because they share the host system’s kernel rather than each running a full guest operating system, containers are lighter than virtual machines and demand less hardware. This benefit could be significant as AI infrastructure costs continue to rise.

The capabilities of containers extend well beyond these examples. Through the implementation of an orchestration tool, you can further harness their power and unlock truly continuous delivery.

Strengthening Container Scalability & Resiliency with Kubernetes

Kubernetes, an open-source orchestration system, amplifies the benefits of containerization through automation. It reduces the manual processes involved in deploying, scaling, and monitoring containers—ultimately streamlining AI and driving holistic efficiency across the container lifecycle. This is made possible by a handful of innovative features, including the following.

1. Autoscaling

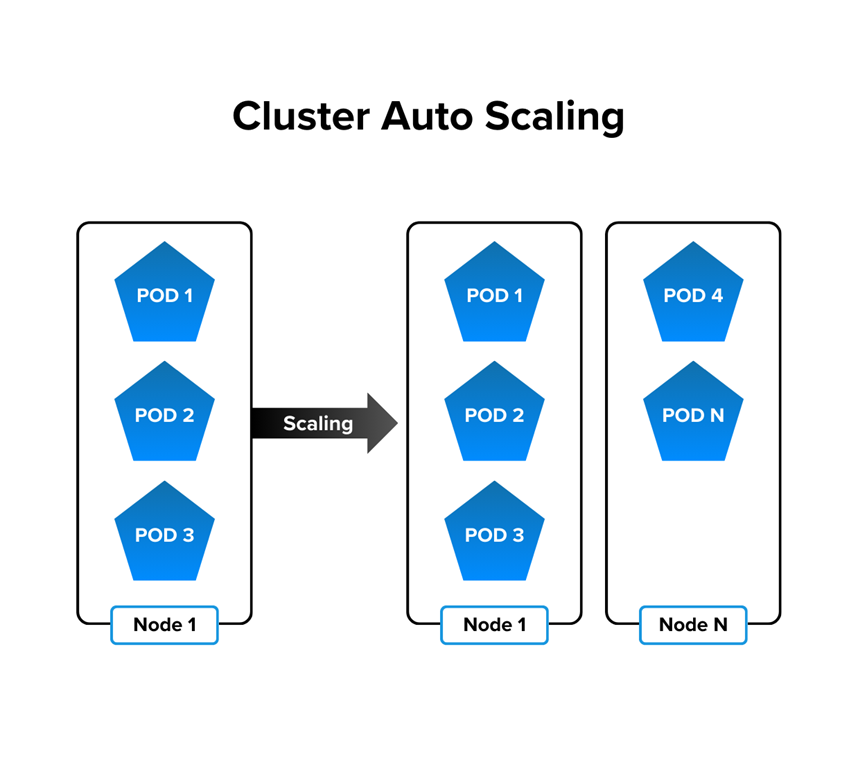

As AI workloads rise and fall, Kubernetes can automatically add or remove nodes, which can be physical or virtual machines. It can also scale the number of pods up or down according to application demand. A pod is the smallest deployable unit in Kubernetes and holds one or more containers that share storage and network resources.

Naturally, this optimizes computational resource utilization, allowing AI models to adapt to different loads with minimal waste and maximized performance. Organizations can handle the large-scale training and processing needs of AI without overprovisioning hardware or incurring unnecessary costs.
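For pod-level scaling, the Kubernetes Horizontal Pod Autoscaler documentation describes a simple ratio rule: the desired replica count is the current count multiplied by the ratio of the observed metric to the target metric, rounded up. The Python sketch below reproduces that arithmetic for illustration only. The function name, the replica bounds, and the example numbers are assumptions, and the real controller applies additional rules (such as stabilization windows) that are not modeled here.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int = 1,
                     max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified version of the Horizontal Pod Autoscaler scaling rule."""
    ratio = current_metric / target_metric
    # Skip scaling when the metric is already close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Keep the result inside the configured bounds (hypothetical defaults here).
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    print(desired_replicas(4, 200, 100))  # load is double the target -> 8
    print(desired_replicas(4, 50, 100))   # load is half the target -> 2
```

The clamp to `min_replicas` and `max_replicas` is what prevents a traffic spike from scaling a model-serving deployment beyond the budget the team has set.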

2. Self-Healing

Containerization certainly cannot eliminate all AI deployment challenges. However, Kubernetes can reduce the impact of potential issues by detecting and resolving problems without disrupting workflows. When a crash occurs, Kubernetes may:

- Restart the container

- Replace the container

- Stop routing user traffic to the container until it passes its health checks again

This continuity keeps AI models running smoothly, even under heavy loads. As a result, AI systems become more reliable and deliver more consistent results.
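Restarts are not retried in a tight loop. According to the Kubernetes documentation, the kubelet restarts a repeatedly crashing container with an exponential back-off delay (10s, 20s, 40s, and so on) capped at five minutes. The short Python sketch below generates that delay sequence. The generator name and its parameters are hypothetical, and the default values should be treated as assumptions that may differ by Kubernetes version or configuration.

```python
from itertools import islice
from typing import Iterator


def restart_backoff(initial: float = 10.0, cap: float = 300.0) -> Iterator[float]:
    """Yield successive restart delays in seconds: doubling, then capped."""
    delay = initial
    while True:
        yield min(delay, cap)
        delay = min(delay * 2, cap)


if __name__ == "__main__":
    # First seven delays a crash-looping container would wait through.
    print(list(islice(restart_backoff(), 7)))
    # [10.0, 20.0, 40.0, 80.0, 160.0, 300.0, 300.0]
```

The growing delay gives a transient fault, such as a briefly unavailable model store, time to clear without the failing container consuming resources on constant restarts.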

3. Rolling Updates

When developers are continuously deploying new versions of applications, Kubernetes rolling updates are invaluable. This feature gradually replaces existing pods, which remain in use until new ones become available.

The benefits of this feature can be likened to the outcomes of using the Strangler pattern in application modernization; no downtime occurs for the end user—again, supporting continuous delivery—and business productivity is minimally affected.
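A rolling update is governed by two limits in a Kubernetes Deployment: `maxSurge` (how many extra pods may exist above the desired count) and `maxUnavailable` (how many pods may be missing below it). The Python sketch below is a simplified simulation of that process, not the actual controller logic. It assumes new pods become ready immediately, and the function name `rolling_update` is hypothetical.

```python
from typing import List, Tuple


def rolling_update(replicas: int,
                   max_surge: int = 1,
                   max_unavailable: int = 0) -> List[Tuple[int, int]]:
    """Return (old_pods, new_pods) counts after each step of a rollout."""
    if max_surge == 0 and max_unavailable == 0:
        raise ValueError("max_surge and max_unavailable cannot both be zero")
    old, new = replicas, 0
    steps = [(old, new)]
    while old > 0 or new < replicas:
        # Scale up: start new pods without exceeding replicas + max_surge in total.
        start = min(replicas - new, replicas + max_surge - (old + new))
        new += max(0, start)  # assumption: new pods are ready right away
        # Scale down: remove old pods while keeping at least
        # replicas - max_unavailable pods running.
        stop = min(old, (old + new) - (replicas - max_unavailable))
        old -= max(0, stop)
        steps.append((old, new))
    return steps


if __name__ == "__main__":
    for old, new in rolling_update(3, max_surge=1, max_unavailable=0):
        print(f"old={old} new={new} total={old + new}")
```

With three replicas, a surge of one, and no pods allowed to be unavailable, the simulation never drops below three running pods, which is why end users see no downtime during the update.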

Accelerating Deployments with Containers as a Service

Kubernetes can empower businesses with outstanding efficiency and scalability, but it can come with a steep learning curve. However, IT professionals who are not already proficient in its architecture can still reap the benefits of the container orchestration platform through a Containers as a Service (CaaS) solution.

CaaS+ by Capstone IT Solutions, for example, is a fully managed option that supports Kubernetes autoscaling and faster deployment cycles. Additionally, it integrates VMware technology to establish a unified security framework. With this turnkey solution, businesses can focus more on developing applications and features, and less on managing the underlying infrastructure.

Optimize, simplify, and expedite AI deployments with containers. Get started on your journey with support from Capstone IT Solutions.
